What if you could have a second brain: not a sci-fi chip in your head, but an AI app that remembers and organizes everything you say throughout the day? That’s what TwinMind, a startup founded by three former Google X researchers, is building. The company recently secured $5.7 million in seed funding, rolled out an Android version of its app, and introduced a new AI-powered speech model called Ear-3.

A Background Listener That Builds Your Personal Knowledge Graph

TwinMind runs quietly in the background, capturing ambient speech with user permission. Every conversation — whether it’s a meeting, lecture, or casual discussion — gets converted into structured notes, tasks, and contextual insights.

Key features include:

- On-device transcription: Works offline and in real time, without sending audio to the cloud.

- Continuous listening: Runs for 16–17 hours without draining the phone’s battery.

- Multilingual support: Real-time translation across 100+ languages.

- Privacy-first design: Audio recordings are deleted instantly, with only transcribed text stored locally.

Unlike tools such as Otter, Fireflies, or Granola, TwinMind doesn’t just capture meeting notes. It passively listens all day — thanks to a custom Swift-based service that bypasses iOS background restrictions.

The Founders’ Journey

TwinMind was co-founded in March 2024 by Daniel George (CEO), along with former Google X colleagues Sunny Tang and Mahi Karim (both CTOs).

The idea was born when George, then at JPMorgan, created a script to record and transcribe his meetings. Feeding transcripts into ChatGPT, he found that the AI could understand his projects and even generate usable code. That proof of concept evolved into TwinMind — an app designed for personal devices rather than restrictive work laptops.

Before TwinMind, George worked at Google X on projects like iyO (AI-powered earbuds) and had an academic background in gravitational wave astrophysics and AI research with Stephen Wolfram. Wolfram even wrote the first check for TwinMind, marking his debut as a startup investor.

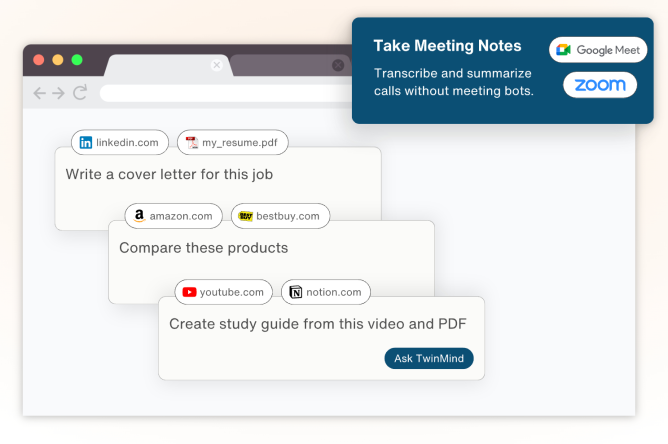

Chrome Extension + Visual Context

Beyond mobile, TwinMind offers a Chrome extension that scans browser tabs, emails, Slack, and Notion pages to add contextual knowledge. This gives the app an edge over tools such as ChatGPT, Claude, Perplexity, and the Arc browser, which lack integration with offline conversations and in-person interactions.

Growth and Global Reach

- Users: 30,000+ (with ~15,000 monthly actives).

- Adoption: Strongest in the U.S., with rising traction in India, Brazil, the Philippines, Ethiopia, Kenya, and Europe.

- Demographics:

  - 50–60% professionals

  - 25% students

  - 20–25% personal users (including George’s father, who uses it to write his autobiography).

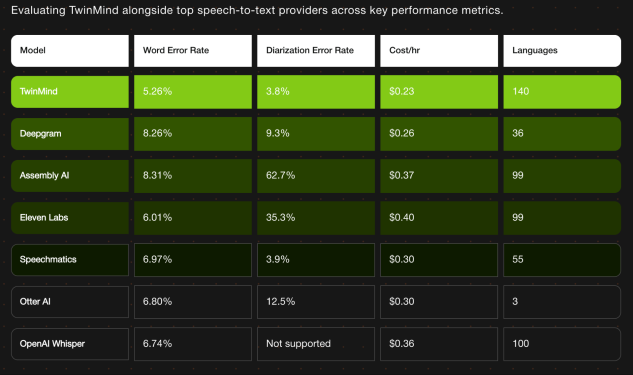

TwinMind Ear-3 Model

The newly released Ear-3 speech model is a leap forward:

- Supports 140+ languages.

- Achieves 5.26% word error rate.

- Speaker recognition with just 3.8% diarization error rate.

- Available via API at $0.23/hour, targeting developers and enterprises.
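To put the accuracy and pricing figures in context, here is a minimal sketch of how word error rate is conventionally computed (word-level edit distance divided by the number of reference words), plus the arithmetic behind the per-hour API price. The function names are hypothetical and the article does not say which test set or text normalization TwinMind used, so treat this as an illustration of the metric rather than a reproduction of the company's benchmark.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Standard WER: (substitutions + deletions + insertions) / reference words."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the reference words seen so far
    # and the first j hypothesis words (classic Levenshtein, row by row).
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (0 if ref_word == hyp_word else 1)
            deletion = prev[j] + 1
            insertion = curr[j - 1] + 1
            curr.append(min(substitution, deletion, insertion))
        prev = curr
    return prev[-1] / len(ref)


def api_cost_usd(audio_hours: float, rate_per_hour: float = 0.23) -> float:
    """Estimated cost at the quoted $0.23/hour rate (hypothetical helper)."""
    return round(audio_hours * rate_per_hour, 2)


if __name__ == "__main__":
    wer = word_error_rate("the cat sat on the mat", "the cat sat on a mat")
    print(f"WER: {wer:.2%}")
    print(f"100 hours of audio: ${api_cost_usd(100):.2f}")
```

By this definition, a 5.26% word error rate corresponds to roughly five word-level mistakes per 100 words of reference transcript.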

While Ear-2 supports full offline use, Ear-3 is cloud-based. The app seamlessly switches between them depending on internet connectivity.
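The switching behavior described above can be sketched as a simple fallback: try the cloud model when the device is online, and use the on-device model otherwise. Everything in this example is an assumption made for illustration, including the function names, the `"ear-3"`/`"ear-2"` labels, and the choice to fall back on a `ConnectionError`; the article does not describe how the app actually implements the handoff.

```python
from typing import Callable, Tuple

# A transcriber takes raw audio bytes and returns text (hypothetical interface).
Transcriber = Callable[[bytes], str]


def transcribe_with_fallback(
    audio: bytes,
    online: bool,
    cloud_model: Transcriber,
    on_device_model: Transcriber,
) -> Tuple[str, str]:
    """Return (model_label, transcript), preferring the cloud model when online."""
    if online:
        try:
            return "ear-3", cloud_model(audio)
        except ConnectionError:
            # Connectivity dropped mid-request: fall through to the local model.
            pass
    return "ear-2", on_device_model(audio)


if __name__ == "__main__":
    def fake_cloud(audio: bytes) -> str:
        return "cloud transcript"

    def fake_local(audio: bytes) -> str:
        return "local transcript"

    print(transcribe_with_fallback(b"\x00", True, fake_cloud, fake_local))
    print(transcribe_with_fallback(b"\x00", False, fake_cloud, fake_local))
```

Passing the two models in as plain callables keeps the selection logic testable without a network connection.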

Pricing & Subscriptions

- Free Plan: Unlimited hours of transcription and on-device speech recognition.

- Pro Plan ($15/month):

  - Up to 2M token context window.

  - Priority email support (within 24 hours).

What’s Next for TwinMind?

With an 11-person team, TwinMind plans to:

- Expand its design team to improve UX.

- Build a business development function for its API.

- Invest in user acquisition campaigns.

TwinMind is positioning itself as more than just a meeting assistant. By merging ambient listening, privacy-first AI processing, and multimodal context gathering, it aims to be a true second brain for professionals, students, and everyday users worldwide.
